Deep Fakes: India's Battle Against Synthetic Realities

Deep fakes demand a robust legal framework, proactive governance and global collaboration to mitigate risks and safeguard individual rights and societal trust.

By Advocate Suresh Tripathi | Published: 2023-12-05

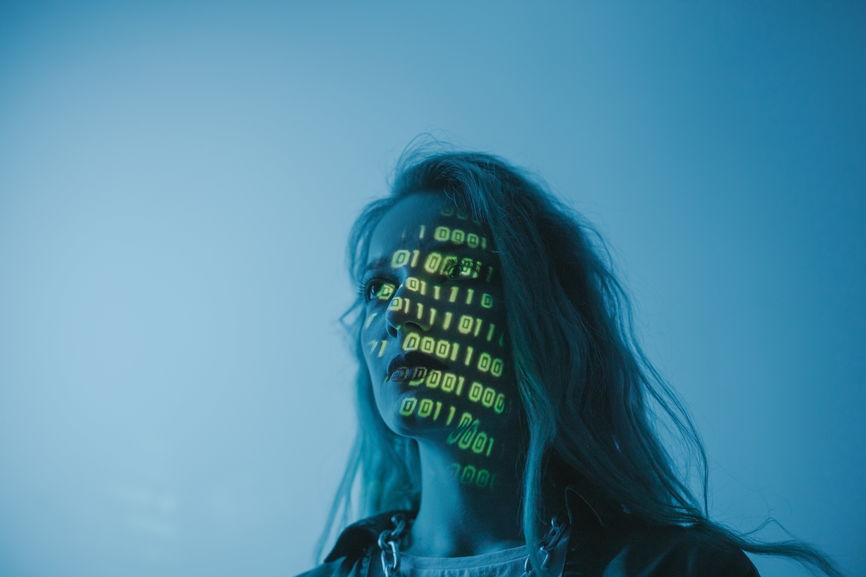

The looming threat of deep fake audio, images and videos has become a serious concern. Because the technology can produce convincing yet misleading content, it can cause harm to everyone.

It is important to understand what deep fake technologies are, what risks they pose, and which laws and policy responses the Indian government is currently considering to address their misuse and abuse.

Understanding Deep Fake Technology:

Deep fakes are a form of manipulated content produced with deep learning, a subset of machine learning and artificial intelligence. These techniques make it possible to automatically synthesize realistic fake audio, images and videos. Deep fake videos can be categorized into three types: face swaps, lip syncs and puppet master creations.

- Face Swap: Involves placing one person's face onto another person's body.

- Lip Sync: Modifies the source video so that the mouth region matches an arbitrary audio recording.

- Puppet Master: A performer's movements and facial expressions are used to animate a target person, creating a completely synthesized video.

Risks Posed by Deep Fakes:

- Social Trust Impact: Deep fakes have the potential to erode social trust by presenting a partial or complete fiction that is highly persuasive.

- Individual Harms: Can lead to personal harm, especially for public figures and individuals from marginalized groups.

- Misinformation and Manipulation: Can be used for spreading misinformation, impacting elections, and manipulating public perception.

- Potential for Abuse: May be used for revenge, objectification, and entertainment at the expense of individuals.

Indian Laws and Policy Responses:

Current Laws: Existing civil and criminal laws in India offer only limited remedies for deep fake abuse. Section 66C of the Information Technology Act, 2000, which deals with identity theft, provides that "whoever, fraudulently or dishonestly, make use of the electronic signature, password or any other unique identification feature of any other person" shall be punished with imprisonment for a term which may extend to three years and shall also be liable to a fine.

The Digital Personal Data Protection Act, 2023, which is not yet in force, has been criticized for exempting personal data that has been made publicly available from its protection. Critics argue that this exemption conflicts with the right to privacy established in judicial rulings.

Enforcement Challenges: Enforcing these laws is difficult, and the burden falls on victims to register complaints and to approach social media companies to have content taken down.

Government Response: The Indian government has been criticized for relying on existing laws, which are widely seen as insufficient. Critics also point to empty rhetoric and a lack of proactive measures.

International Approaches:

Europe: The European Union is considering the AI Act, which proposes classifying and regulating different AI applications according to their risks and potential harms.

United States: The U.S. President has issued an executive order on the safe, secure and trustworthy development of AI. Among other things, it aims to establish standards for detecting AI-generated content and authenticating official content.

Conclusion:

The threat of deep fake technology requires comprehensive legal frameworks, proactive government responses, and international collaboration to mitigate risks and protect individuals' rights and societal trust. The current legal landscape in India is considered inadequate, necessitating a re-evaluation and enhancement of policies.